At the core of QBit, a microservices library, is the queue. QBit centers around queues and micro-batching. A queue is a stream of messages. Micro-batching improves throughput by reducing thread handoffs and improving IO efficiency. You can implement back pressure in QBit itself. However, Reactive Streams has a very clean interface for implementing back pressure. Increasingly, APIs for new SQL databases, data grids, and messaging are using some sort of streaming API.
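To make the micro-batching idea concrete, here is a minimal sketch in plain Java. It is not QBit's implementation: `MicroBatcher` and `MicroBatchDemo` are hypothetical names, and the `send`/`flushSends` method names are only borrowed from the `SendQueue` calls used later in this article. The point is that the receiver is handed one list per batch instead of one handoff per message.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helper, not part of QBit: buffers items and hands them off in batches. */
final class MicroBatcher<T> {
    private final int batchSize;
    private final Consumer<List<T>> handoff;
    private List<T> buffer;

    MicroBatcher(int batchSize, Consumer<List<T>> handoff) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        this.batchSize = batchSize;
        this.handoff = handoff;
        this.buffer = new ArrayList<>(batchSize);
    }

    /** Buffers one item and hands off the whole batch once it is full. */
    void send(T item) {
        buffer.add(item);
        if (buffer.size() >= batchSize) {
            flushSends();
        }
    }

    /** Hands off whatever is buffered, even a partial batch. */
    void flushSends() {
        if (buffer.isEmpty()) {
            return;
        }
        List<T> batch = buffer;
        buffer = new ArrayList<>(batchSize);
        handoff.accept(batch); // one handoff for the whole batch
    }
}

final class MicroBatchDemo {
    /** Sends count items and returns the size of each batch that was handed off. */
    static List<Integer> batchSizes(int count, int batchSize) {
        List<Integer> sizes = new ArrayList<>();
        MicroBatcher<Integer> batcher =
                new MicroBatcher<>(batchSize, batch -> sizes.add(batch.size()));
        for (int i = 0; i < count; i++) {
            batcher.send(i);
        }
        batcher.flushSends();
        return sizes;
    }

    public static void main(String[] args) {
        System.out.println(batchSizes(23, 10)); // prints [10, 10, 3]
    }
}
```

With a batch size of 10, sending 23 items costs three handoffs rather than 23. In a real queue the handoff would cross a thread boundary, which is where the saving comes from.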

If you are using Apache Spark, Kafka, Apache Storm, or Cassandra, then you are likely already familiar with streaming APIs. Java 8 ships with a streaming API, and Java 9 improves on the concepts with Flow. Streams are coming to you one way or another if you are a Java programmer.

There is even an effort to define a base-level stream API for compatibility's sake, called Reactive Streams.

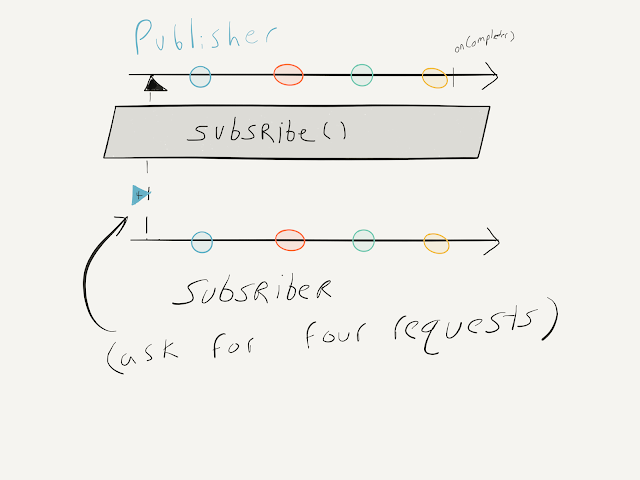

Reactive Streams is an initiative to provide a standard for asynchronous stream processing with non-blocking back pressure. This encompasses efforts aimed at runtime environments (JVM and JavaScript) as well as network protocols.
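As a rough illustration of what non-blocking back pressure means, here is a small sketch built on the JDK's `java.util.concurrent.Flow` interfaces (Java 9+), which mirror the Reactive Streams `Publisher`, `Subscriber`, and `Subscription` interfaces. `RangePublisher` and `WindowedSubscriber` are hypothetical names made up for this example, and the publisher is single-threaded and simplified, so treat it as a sketch rather than a spec-compliant implementation. The publisher emits only as many items as the subscriber has requested.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

/** Hypothetical single-threaded publisher of 0..total-1 that emits only what was requested. */
final class RangePublisher implements Flow.Publisher<Integer> {
    private final int total;

    RangePublisher(int total) {
        this.total = total;
    }

    @Override
    public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
        subscriber.onSubscribe(new Flow.Subscription() {
            private int next;         // next value to emit
            private long demand;      // requested but not yet delivered
            private boolean emitting; // true while the emit loop is running
            private boolean done;

            @Override
            public void request(long n) {
                if (done) {
                    return;
                }
                if (n <= 0) {
                    done = true;
                    subscriber.onError(new IllegalArgumentException("n must be > 0"));
                    return;
                }
                demand = (demand + n < 0) ? Long.MAX_VALUE : demand + n; // cap on overflow
                if (emitting) {
                    return; // re-entrant call from onNext: the running loop sees the new demand
                }
                emitting = true;
                while (!done && demand > 0 && next < total) {
                    demand--;
                    subscriber.onNext(next++);
                }
                emitting = false;
                if (!done && next == total) {
                    done = true;
                    subscriber.onComplete();
                }
            }

            @Override
            public void cancel() {
                done = true;
            }
        });
    }
}

/** Hypothetical subscriber that requests a window of items and replenishes after each full window. */
final class WindowedSubscriber implements Flow.Subscriber<Integer> {
    final List<Integer> received = new ArrayList<>();
    int requestCalls;
    boolean completed;
    Throwable error;

    private final int window;
    private Flow.Subscription subscription;
    private int sinceLastRequest;

    WindowedSubscriber(int window) {
        this.window = window;
    }

    @Override
    public void onSubscribe(Flow.Subscription s) {
        subscription = s;
        requestMore();
    }

    @Override
    public void onNext(Integer item) {
        received.add(item);
        if (++sinceLastRequest == window) {
            sinceLastRequest = 0;
            requestMore();
        }
    }

    @Override
    public void onError(Throwable t) {
        error = t;
    }

    @Override
    public void onComplete() {
        completed = true;
    }

    private void requestMore() {
        requestCalls++;
        subscription.request(window);
    }
}
```

Subscribing a `WindowedSubscriber` with a window of 10 to a `RangePublisher` of 25 items delivers all 25 items across three `request(10)` calls. A subscriber that never calls `request` again after its first window would simply stop receiving items, which is the back pressure signal.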

Realizing the importance of streaming standards and the ability to integrate into a larger ecosystem, QBit now supports reactive streams and will support it more as time goes on.

QBit endeavors to make more and more things look like QBit queues, which are a convenient interface for dealing with IO, interprocess, and inter-thread communication at speeds of up to 100M to 200M TPS.

We have added two classes to adapt a QBit queue to a stream: QueueToStreamRoundRobin and QueueToStreamUnicast. Later there will be classes to adapt a stream to a queue.

The overhead of back pressure support is around 20 to 30%. There are more QBit-centric ways of implementing back pressure that will have less overhead, but in the grand scheme of things this overhead is quite small. QueueToStreamRoundRobin and QueueToStreamUnicast have been clocked between 70M TPS and 80M TPS, with the advantage of easily handling resource consumption management so that a fast data source does not overwhelm the stream destination. QueueToStreamRoundRobin allows many reactive stream destinations from the same QBit queue.

Let's see these two in action:

Simple trade class

    public class Trade {

        final String name;
        final long amount;

        private Trade(String name, long amount) {
            this.name = name;
            this.amount = amount;
        }

        ...

    }

Adapting a Queue into a Reactive Stream

    final Queue<Trade> queue =
            QueueBuilder
                    .queueBuilder()
                    .build();

    /* Adapt the queue to a stream. */
    final QueueToStreamUnicast<Trade> stream =
            new QueueToStreamUnicast<>(queue);

    /* Holds any error reported by the stream. */
    final AtomicReference<Throwable> error = new AtomicReference<>();

    stream.subscribe(new Subscriber<Trade>() {

        private Subscription subscription;
        private int count;

        @Override
        public void onSubscribe(Subscription s) {
            this.subscription = s;
            /* Ask for the first 10 trades. */
            s.request(10);
        }

        @Override
        public void onNext(Trade trade) {
            // Do something useful with the trade.
            count++;
            /* After every 10 trades, ask for 10 more. */
            if (count > 9) {
                count = 0;
                subscription.request(10);
            }
        }

        @Override
        public void onError(Throwable t) {
            error.set(t);
        }

        @Override
        public void onComplete() {
            /* shut down. */
        }
    });

    /* Send some sample trades. */
    final SendQueue<Trade> tradeSendQueue = queue.sendQueue();

    for (int index = 0; index < 20; index++) {
        tradeSendQueue.send(new Trade("TESLA", 100L + index));
    }

    tradeSendQueue.flushSends();

For the multicast version, QueueToStreamRoundRobin, here is a simple unit test showing how it works.

Unit test showing how multicast works

    package io.advantageous.qbit.stream;

    import io.advantageous.boon.core.Sys;
    import io.advantageous.qbit.queue.Queue;
    import io.advantageous.qbit.queue.QueueBuilder;
    import io.advantageous.qbit.queue.SendQueue;
    import org.junit.Test;
    import org.reactivestreams.Subscriber;
    import org.reactivestreams.Subscription;

    import java.util.concurrent.CopyOnWriteArrayList;
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.TimeUnit;
    import java.util.concurrent.atomic.AtomicBoolean;
    import java.util.concurrent.atomic.AtomicReference;

    import static org.junit.Assert.assertEquals;
    import static org.junit.Assert.assertNotNull;

    public class QueueToStreamMulticast {

        private class Trade {

            final String name;
            final long amount;

            private Trade(String name, long amount) {
                this.name = name;
                this.amount = amount;
            }
        }

        @Test
        public void test() throws InterruptedException {

            final Queue<Trade> queue = QueueBuilder.queueBuilder().build();
            final QueueToStreamRoundRobin<Trade> stream = new QueueToStreamRoundRobin<>(queue);

            final CopyOnWriteArrayList<Trade> trades = new CopyOnWriteArrayList<>();
            final AtomicBoolean stop = new AtomicBoolean();
            final AtomicReference<Throwable> error = new AtomicReference<>();
            final AtomicReference<Subscription> subscription = new AtomicReference<>();

            final CountDownLatch latch = new CountDownLatch(1);
            final CountDownLatch stopLatch = new CountDownLatch(1);

            stream.subscribe(new Subscriber<Trade>() {

                @Override
                public void onSubscribe(Subscription s) {
                    /* Request only 10 trades and never ask for more. */
                    s.request(10);
                    subscription.set(s);
                }

                @Override
                public void onNext(Trade trade) {
                    trades.add(trade);
                    if (trades.size() == 10) {
                        latch.countDown();
                    }
                }

                @Override
                public void onError(Throwable t) {
                    error.set(t);
                }

                @Override
                public void onComplete() {
                    stop.set(true);
                    stopLatch.countDown();
                }
            });

            /* Send 100 trades, ten times more than the subscriber requested. */
            final SendQueue<Trade> tradeSendQueue = queue.sendQueue();

            for (int index = 0; index < 100; index++) {
                tradeSendQueue.send(new Trade("TESLA", 100L + index));
            }

            tradeSendQueue.flushSends();
            Sys.sleep(100);
            latch.await(10, TimeUnit.SECONDS);

            /* Only the 10 requested trades were delivered. */
            assertEquals(10, trades.size());
            assertEquals(false, stop.get());
            assertNotNull(subscription.get());

            /* Cancelling the subscription completes the subscriber. */
            subscription.get().cancel();
            stopLatch.await(10, TimeUnit.SECONDS);

            assertEquals(true, stop.get());
        }

        @Test
        public void test2Subscribe() throws InterruptedException {

            final Queue<Trade> queue = QueueBuilder.queueBuilder().setBatchSize(5).build();
            final QueueToStreamRoundRobin<Trade> stream = new QueueToStreamRoundRobin<>(queue);

            final CopyOnWriteArrayList<Trade> trades = new CopyOnWriteArrayList<>();
            final AtomicBoolean stop = new AtomicBoolean();
            final AtomicReference<Throwable> error = new AtomicReference<>();
            final AtomicReference<Subscription> subscription = new AtomicReference<>();

            final CountDownLatch latch = new CountDownLatch(1);
            final CountDownLatch stopLatch = new CountDownLatch(1);

            /* First subscriber: requests 10 trades. */
            stream.subscribe(new Subscriber<Trade>() {

                @Override
                public void onSubscribe(Subscription s) {
                    s.request(10);
                    subscription.set(s);
                }

                @Override
                public void onNext(Trade trade) {
                    trades.add(trade);
                    if (trades.size() == 20) {
                        latch.countDown();
                    }
                }

                @Override
                public void onError(Throwable t) {
                    error.set(t);
                }

                @Override
                public void onComplete() {
                    stop.set(true);
                    stopLatch.countDown();
                }
            });

            /* Second subscriber: also requests 10 trades and shares the same list and latches. */
            stream.subscribe(new Subscriber<Trade>() {

                @Override
                public void onSubscribe(Subscription s) {
                    s.request(10);
                    subscription.set(s);
                }

                @Override
                public void onNext(Trade trade) {
                    trades.add(trade);
                    if (trades.size() == 20) {
                        latch.countDown();
                    }
                }

                @Override
                public void onError(Throwable t) {
                    error.set(t);
                }

                @Override
                public void onComplete() {
                    stop.set(true);
                    stopLatch.countDown();
                }
            });

            /* Send 40 trades, twice the combined demand of the two subscribers. */
            final SendQueue<Trade> tradeSendQueue = queue.sendQueue();

            for (int index = 0; index < 40; index++) {
                tradeSendQueue.send(new Trade("TESLA", 100L + index));
            }

            tradeSendQueue.flushSends();
            Sys.sleep(100);
            latch.await(10, TimeUnit.SECONDS);

            /* Only the 20 requested trades (10 per subscriber) were delivered. */
            assertEquals(20, trades.size());
            assertEquals(false, stop.get());
            assertNotNull(subscription.get());

            subscription.get().cancel();
            stopLatch.await(10, TimeUnit.SECONDS);

            assertEquals(true, stop.get());
        }
    }
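The behavior the test above exercises, where several destinations share one queue and each receives only what it requested, can be sketched in plain Java. This is an assumption about the general technique, not QBit's actual `QueueToStreamRoundRobin` code: `RoundRobinDispatcher`, `Destination`, and `RoundRobinDemo` are hypothetical names invented for this example.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch, not QBit's implementation: round-robin handoff that respects demand. */
final class RoundRobinDispatcher<T> {

    /** One destination plus the number of items it has requested and not yet received. */
    static final class Destination<T> {
        final List<T> received = new ArrayList<>();
        private long demand;

        void request(long n) {
            demand += n;
        }
    }

    private final List<Destination<T>> destinations = new ArrayList<>();
    private int cursor; // index of the destination to try first

    Destination<T> addDestination() {
        Destination<T> destination = new Destination<>();
        destinations.add(destination);
        return destination;
    }

    /** Offers one item; returns false when no destination has outstanding demand. */
    boolean offer(T item) {
        for (int tried = 0; tried < destinations.size(); tried++) {
            Destination<T> destination = destinations.get(cursor);
            cursor = (cursor + 1) % destinations.size();
            if (destination.demand > 0) {
                destination.demand--;
                destination.received.add(item);
                return true;
            }
        }
        return false; // back pressure: the caller has to hold on to the item
    }
}

final class RoundRobinDemo {
    /** Destinations a and b request 10 and 3 items; returns {received by a, received by b, refused}. */
    static int[] run(int items) {
        RoundRobinDispatcher<Integer> dispatcher = new RoundRobinDispatcher<>();
        RoundRobinDispatcher.Destination<Integer> a = dispatcher.addDestination();
        RoundRobinDispatcher.Destination<Integer> b = dispatcher.addDestination();
        a.request(10);
        b.request(3);

        int refused = 0;
        for (int i = 0; i < items; i++) {
            if (!dispatcher.offer(i)) {
                refused++;
            }
        }
        return new int[] {a.received.size(), b.received.size(), refused};
    }
}
```

Offering 20 items gives 10 to the first destination, 3 to the second, and refuses the remaining 7. A slow destination is skipped once its demand runs out instead of being flooded, and the source learns that it has to wait.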

QBit has support for a Queue API that works over Kafka, JMS, WebSocket and in-memory queues. You can now use reactive streams with these queues and the performance is very good.